Will the polls get the general election wrong?

Welcome to the 109th edition of The Week in Polls (TWIP), and the first of the 2024 general election campaign. So what better topic to pick than whether the opinion polls might be wrong about the Conservatives being a long way behind and looking set for defeat?

Then it’s a look at the latest voting intention polls followed by, for paid-for subscribers, 10 insights from the last week’s polling and analysis. (If you’re a free subscriber, sign up for a free trial here to see what you’re missing.)

A quick note first that I’ve slipped in an extra update to PollBase, my set of data of voting intention polls all the way back to 1943. With the data being used all over the place (thank you to everyone making good use of it!), I’ve pushed out various small corrections to pre-2015 data, such as more precise dates, extra party approval figures and the like.

And a moment of praise too for this new style of pollsters tweeting, ‘no no, please don’t get too excited about our new poll’. Given they have to justify someone paying for their services, it’s easy to understand why pollsters can be a bit prone to ‘don’t pay too much attention to any one poll… BUT LOOK AT MY NEW ONE JUST OUT!’. It may just be random noise, but I detect a trend of pollsters being more willing to avoid that and to dampen down reactions. More, please.

Been forwarded this email by someone else? Sign up to get your own copy here.

Want to know more about political polling? Get my book Polling UnPacked: the history, uses and abuses of political opinion polling.

Will the polls get the general election result wrong?

One of my tasks in a previous general election was to organise the mass nationwide purchase of Charles Kennedy gnomes. The idea was to purchase enough gnomes to move the ‘gnome poll’ being carried out by a leading supermarket across marginal seats.

We bought many gnomes, we gained seats and I still have two CK gnomes, one in the garden and one visible over my shoulder on Zoom calls.

We can agree though, I hope, that gnome purchases are not a great electoral predictor.

What, however, about opinion polls? Will the polls be right this time around? Or will pieces like this have to be written after polling day:

What does getting it wrong mean?

Pollsters, understandably, often prefer to be judged by how close their vote share figures for each party are to the actual election result. The average of all those errors is the standard benchmark.

But in practice, that’s not what most people are looking for from the polls. They don’t worry so much about how far out the polls are for the smaller parties.1 Nor do they much mind about the exact vote shares, as long as the overall picture is roughly right.

It is why the big misses on vote shares by some pollsters in 1997 did not trigger much angst, because they all - correctly - had Labour on course to win big. It’s also why the pretty small misses on vote shares by many pollsters in the Brexit referendum did trigger plenty of angst - because they mostly had Remain ahead.
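To make that distinction concrete, here is a minimal Python sketch. It assumes the simplest version of the standard benchmark, the mean absolute gap between each party’s final poll figure and its actual vote share (real studies vary in which parties they include and how they measure it), and it uses made-up figures that are purely hypothetical, not any real poll or result. The function names are illustrative ones of my own.

```python
def mean_absolute_error(poll: dict[str, float], result: dict[str, float]) -> float:
    """Average absolute gap, in percentage points, across every party in the result."""
    gaps = [abs(poll[party] - result[party]) for party in result]
    return sum(gaps) / len(gaps)


def lead_error(poll: dict[str, float], result: dict[str, float],
               first: str = "Con", second: str = "Lab") -> float:
    """Polled lead of `first` over `second`, minus the actual lead, in points."""
    return (poll[first] - poll[second]) - (result[first] - result[second])


# Hypothetical figures for illustration only; not a real poll or election.
poll = {"Con": 38.0, "Lab": 34.0, "LD": 12.0, "Other": 16.0}
result = {"Con": 40.0, "Lab": 33.0, "LD": 11.0, "Other": 16.0}

print(mean_absolute_error(poll, result))  # 1.0 point average error
print(lead_error(poll, result))           # -3.0: the lead was understated by 3 points
```

A one-point average error sounds reassuringly small, yet in this made-up example it hides a three-point miss on the lead, which is the number most people actually care about.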

So I’m going to use ‘getting it right/wrong’ in that loose sense. Sorry to those pollsters who don’t like that approach.2 The polls show the Conservatives a long way behind and headed to defeat. The polls might close, but if they don’t, is that going to be reality or might there be a big polling miss?

Overall polling accuracy

Let’s start with the question of whether polls are worth putting much faith in generally. Do they get things right normally? Yes, yes they do.

As I wrote about in my book about polling, Polling UnPacked:

The most comprehensive international study of the reliability of opinion polling [was] carried out by Will Jennings and Christopher Wlezien. Being academics rather than pollsters or media outlets who commission polls, Jennings and Wlezien went into their research with no preferred outcome. For them, finding polls are accurate or inaccurate would have made for an equally good research finding. What they found was good for pollsters: “Our analysis draws on more than 30,000 national polls from 351 general elections in 45 countries between 1942 and 2017 . . . We examine errors in polls in the final week of the election campaign to assess performance across election years . . . We find that, contrary to conventional wisdom, the recent performance of polls has not been outside the ordinary.”…

The average polling error – the gap between the vote share figures in the final pre-election polls and what the parties or candidates secured – has been just 2.1 per cent across 1942–2017. That is pretty darn close. Nor has the rate of error been rising, for the average since the year 2000 is 2.0 per cent. Indeed, there are signs of the error rate being on a very gently downwards long-term trajectory. Nor does zooming in to just 2015–17 reveal a spike in the average error levels.

Overall, the polls are good, and errors of that typical size are not enough to trouble the headline picture the polls are giving at the moment.

The record at British elections

A different way of looking at the error rate in polls is to ask in how many general elections polls have got it wrong here in Britain.

We’ve had 21 general elections with polls and of those, three have seen spectacular polling misses: 1970, 1992 and 2015.

The track record therefore is that 6 times out of 7 the polls are, roughly, right and 1 time in 7 they lead us all astray. With general elections every four years or so, that means we can expect egg on the face of pollsters typically around once every 28 years.

It’s why, despite being a big believer in the usefulness of polls, especially when compared to other metrics such as gnome purchases, I also hope to live through a couple of big polling misses during the rest of my life. I hope you do too.

But if I had to stake my life on it, I’d pick the 6-in-7 option rather than the 1-in-7 option.
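The arithmetic behind that 1-in-7 and once-every-28-years rule of thumb is simple enough to spell out. This sketch uses only the figures above (21 elections, 3 big misses, an election roughly every four years) and treats the four-year gap as exact, which it isn’t in reality.

```python
from fractions import Fraction

elections_with_polls = 21
big_misses = 3                  # 1970, 1992 and 2015
years_between_elections = 4     # "every four years or so", treated as exact here

miss_rate = Fraction(big_misses, elections_with_polls)
print(miss_rate)                # 1/7
print(1 - miss_rate)            # 6/7: the polls roughly right

# Expected gap between big misses: election spacing divided by the miss rate.
years_per_miss = years_between_elections / miss_rate
print(years_per_miss)           # 28
```

Treat this as a rough historical frequency rather than a precise probability: 21 elections is a small sample.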

Moreover, all three of those disasters saw errors on the Labour/Conservative lead of a scale that, even if repeated this year, would still leave the Conservatives well behind. Unless the polls close to bring that lead down, we would need not only a rare error but one of unprecedented scale.

Are there any clues that such an error could be on the way?

Polls at recent local elections and by-elections

Courtesy of the Mayor contests in particular but also Parliamentary by-elections, we’ve had quite a few polls recently that were tested against reality.

Both sorts of contests are tougher for pollsters to get right than a general election as, on average, the higher the turnout, the more accurate polls are.

Even so, the polls came out of those tests fine, with the Mayor contest polls typically around 3 points too generous to Labour on its lead over (or deficit behind) the Conservatives. I summarised the polls for May as, “A decent set of polls then, pretty good though not brilliant.”

Likewise, Parliamentary by-election polls have done reasonably well. Polling is usually done early or mid-campaign, so we don’t know which polling ‘errors’ are really just down to shifts in support before all the votes are cast. Hence, although there have been three by-elections in recent years in which the last poll of the campaign got the winner ‘wrong’, that isn’t nearly as bad as it may sound, given how likely real movements in support are in the closing days of by-elections.3

What we do know with greater certainty is that the results in Parliamentary by-elections in the second half of this Parliament have been the sort seen ahead of governments crashing to defeat.4 The run-up to the 2015 and 1992 polling misses saw the government doing better in by-elections than it is now, so that’s one warning sign that isn’t being repeated.

Are the 2015 clues present?

Matt Singh made his name in the polling community by, before the 2015 general election result was known, writing that, “while I would urge caution with respect to this analysis, it looks a lot like the Tories are set to outperform the polls once again.”5

He was right, and the polls were wrong.

What did his analysis spot that correctly foretold the polling error?

He found a pattern of the polls tending to over-estimate Labour and under-estimate the Conservatives.6 That matches the warning sign from the Mayor polls mentioned above, though not on a scale to worry us this time around, unless the polls close considerably.

He speculated that the unusual fluidity of the electorate in 2010-15, with the Lib Dems declining and Ukip surging, may have knocked off some of the pollster models. This Parliament, if the polls are right, has seen some big moves, though in the more straightforward pattern of the government falling and the main opposition rising. Even if you add in the rise of Reform recently, overall it is not obvious why this would be a pattern to undo the methodologies that got 2019 right.

The big one: “many have noted that for almost all of this parliament, the electorate’s voting intention has been at odds with its views of party leaders and economic competence … to quote YouGov President Peter Kellner: ‘I can find no example of a party losing an election when it is ahead on both leadership and economic competence.’ … but I can find an example of a party being ahead on the economy and ahead on leadership, but unable to pull meaningfully ahead in opinion polls. That was the Tories in – you guessed it – 1992.” That is not where we are now. The Conservatives are not leading on the economy and Sunak is not leading on leadership. For all the accurate talk about how Labour and Starmer’s leads on measures other than voting intention often aren’t great, we’re not in a situation like that of pre-2015 or pre-1992.

He also compared mid-term local election national vote share calculations with the subsequent election result, projecting that the Conservative performance in local elections in the 2010-15 Parliament was indicating a much better result than the polls were suggesting. This is a tricky one: looking at his key graph, you could take the local election results in this Parliament as pointing to the Conservatives being about to out-perform their polls. But predicting from local election vote shares to general elections is a much contested area, and other more recent analysis either concludes that there is not much of a relationship between the two or that, to quote an earlier edition of TWIP, “A more straightforward piece of analysis by Will Jennings, comparing NEV scores with subsequent general election results, also shows that this May is not a pointer towards the polls being wrong. Similarly, Paul Whiteley’s modelling of local election results versus subsequent general election results point to a Labour majority (though a small one).” Moreover, for 2015 this was one of several risk factors identified by Matt Singh, all of which pointed to a possible poll error. This time there isn’t a similar unanimity of warning signs.

Or as Matt put it to me over the weekend about whether we should be worried about that possible local election vote share clue of an impending opinion poll miss:

I think the key differences [between now and 2015] are that it’s only one thing rather than everything, and in terms of “real votes”, chiefly Westminster by-elections, this looks nothing like 2015 (both Labour and Lib Dems have been getting mid-90s level swings)

I also think there are other explanations for the narrower gap in local elections than you’d expect given the polls. We know for sure that the squeezable votes are much more on the left (or at least, lean Labour over Conservative right now). The system is more fragmented, ticket-splitting may be higher, the age-based realignment may have moved lower turnout elections to the right, the anti-governing party bias may be less than it was, and so on.

So I think there are too many things that do fit with the polling to think that a major miss is likely.

Will the electoral system get it wrong?

One way the polls can be right yet still get the big picture wrong is if our voting system serves up a winner from the party that got fewer votes.

If anything, the signs are the other way on this. Numerous analyses of likely tactical voting trends, the distribution of Labour support and the concentration of Lib Dem support all, in their various ways, point to the Conservatives likely under-performing on their seat numbers relative to vote share rather than over-performing. There are, for example, widespread predictions from outside the party that the Lib Dems’ seat tally will over-perform their national vote share.

Any other warning signs to be found?

The sort of changes that can challenge pollsters, such as a big change in turnout, a shake-up in the party system or the rise of a large new populist voice, don’t seem to be around. Reform of course is new, but from a pollster methodology perspective they are not so different from what we had in 2019 and earlier.

Voter ID has been much talked about and worried about. Yet so far there’s little sign of it bringing a poll-busting benefit to any one party, both because it has had little effect on turnout up till now and because what effect there has been has hit would-be Conservative voters too. There has not been any significant net partisan advantage in election results from this policy, so far.

Another risk is that perhaps we’ll find that our pollsters are not all as varied as they appear. The combination of the rise of online polling and the secrecy over the sourcing of panels for those polls means it is possible that many pollsters share a panel supplier whose data is off.

If that were to happen, it would mean one problem - one panel supplier serving up biased samples - would knock off multiple pollsters. But those pollsters who either have their own panels or don’t use such panels, such as because they do phone polls, aren’t getting much of a different picture from those who might be susceptible to such a risk.

James Kanagasooriam talked up other possible problems earlier this year:

The next UK election - in terms of vote % and seat counts - is going to be a tough one for the polling industry to nail; new census, new boundaries, 5 years since last elex driving false recall, proportional swing, tactical voting. An absolute perfect storm of potential error.

New census data not yet being available was part of the story of the 1992 polling miss, but this election is in 2024, two years further on from the preceding census than the 1992 election was. New boundaries, proportional swing and tactical voting may cause problems for seat projections, but they shouldn’t be big problems for vote shares and leads. False recall may be an issue, but some pollsters, notably YouGov, do have records from the time of how people said they would vote in 2019, rather than relying on what they now say they remember doing.

So none of this seems to add up to a serious risk this time.

Nor are we seeing a big movement in the polls, something that featured in the collection of so-called polling misses that Labour’s Morgan McSweeney put together in a deck to battle Labour complacency. Going by the Financial Times’s reporting of the deck, at least, he scoured the world over multiple years and found only four examples: three in which there was a clear and big movement in the polls, and one in which the national polls were actually right.7

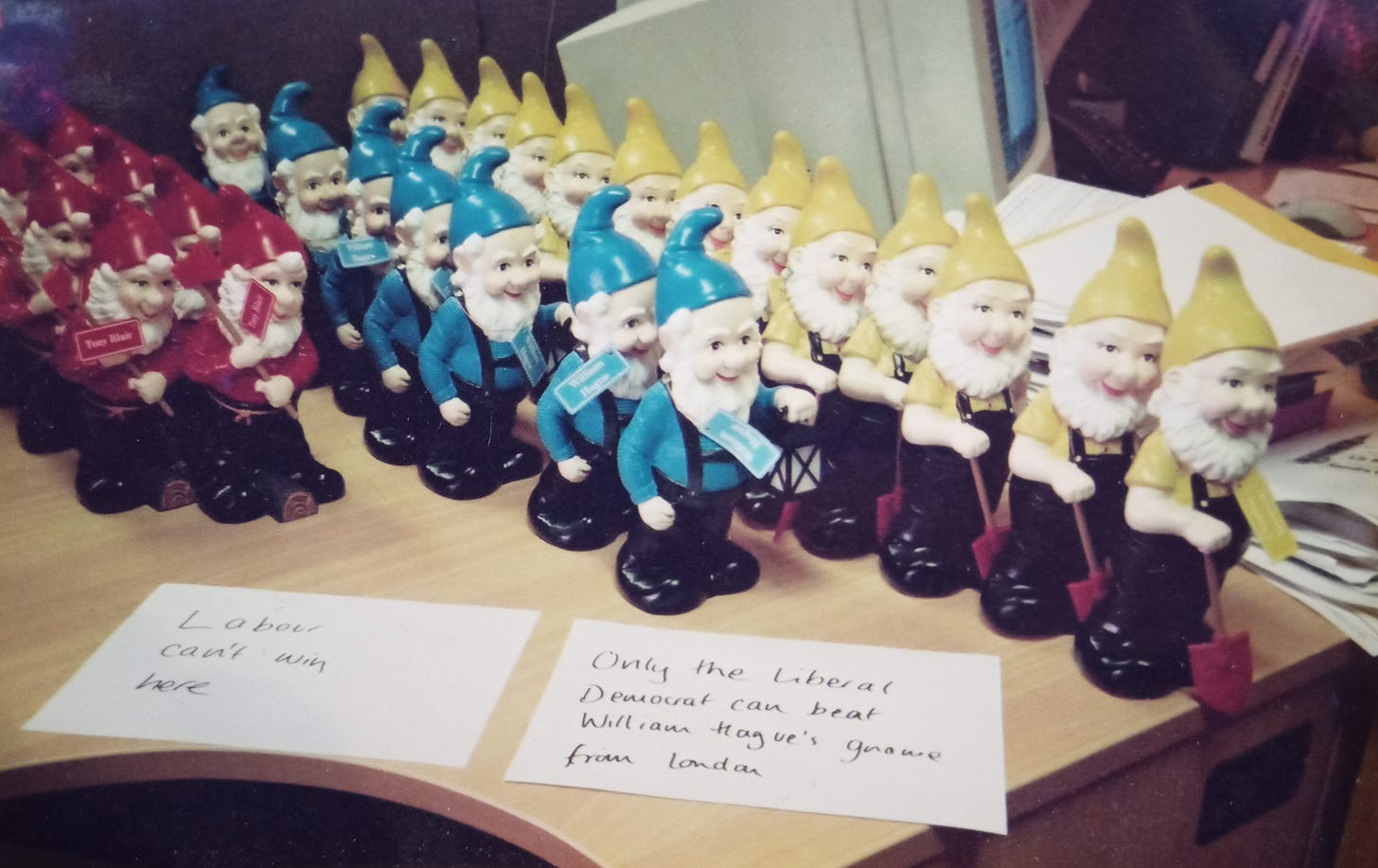

Which leaves two genuine worries. First, there is still a big range in Conservative poll deficits between different pollsters. In particular, the differing ways pollsters treat the don’t knows have a noticeable impact on their figures, yet the overall picture is the same even so.8

Those divergences may narrow as the number of don’t knows declines. But if the polls with differing methodologies don’t converge on each other… and if the Conservatives rise in the polls so that the spread is no longer between a massive and a huge Labour lead but between a narrow and a comfortable one, then that could get worrisome. Could get, but only if that double if becomes true.

Second, past polling errors don’t generally happen because one thing went wrong. They happen because lots of things went wrong, unluckily adding up in the same direction. As I put it for Polling UnPacked:

Polling is not a search for the perfect methodology that, once found, will solve everything. Rather, it is a constant battle against circumstances, adjusting as it goes, trying to stay close to the truth. As we have explored, it is rare that just one thing goes wrong for political polling. Instead, when the polls go wrong, it requires the bad luck of multiple events, stacking up to push the polls off in the same direction, and so adding up to a headline-grabbing error.

You can certainly tally up the factors I’ve run through and come up with a list of possible problems that might, just might, line up and cause a big polling miss.

In conclusion…

Crucially, though, as Deltapoll’s Matthew Price and Martin Boon wrote in their email newsletter this week:

Even the largest change in lead during a campaign (eleven points in 2017) plus the largest polling error in recent history (seven-and-a-half points in 1992) would not be enough to close the gap. Labour would still win the most votes, and the most seats.

So there’s the six-in-seven chance that the polls won’t be badly out and, even if they are, they’d have to be out by more than they’ve ever been for reality to contradict the headline picture from the polls.

The big Conservative poll deficit is therefore, most likely, a foreshadowing of a bad election night for the Prime Minister.
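Deltapoll’s worst-case test can be written as a one-line check. This is a sketch that assumes both record figures are measured in points on the Labour lead and can simply be added together; the current lead is left as an input rather than taken from any particular poll, and the 20- and 15-point leads below are hypothetical.

```python
LARGEST_CAMPAIGN_SHIFT = 11.0  # change in lead during the 2017 campaign, per Deltapoll
LARGEST_POLLING_ERROR = 7.5    # polling error in 1992, per Deltapoll


def lead_survives_worst_case(labour_lead: float) -> bool:
    """True if a Labour poll lead (in points) is bigger than the record
    campaign shift and the record polling error combined."""
    return labour_lead > LARGEST_CAMPAIGN_SHIFT + LARGEST_POLLING_ERROR


print(LARGEST_CAMPAIGN_SHIFT + LARGEST_POLLING_ERROR)  # 18.5
print(lead_survives_worst_case(20.0))  # True  (hypothetical 20-point lead)
print(lead_survives_worst_case(15.0))  # False (hypothetical 15-point lead)
```

This checks votes only: winning the most votes does not guarantee the most seats, though as discussed above the signs on that point currently favour Labour rather than the Conservatives.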

National voting intention polls

As mentioned last time, there was something odd with the last PeoplePolling data tables. They, and their headline figures, have now been corrected, and we also have the first polls carried out since the election was called.

For more details and updates through the week, see my daily updated table here and for all the historic figures, including Parliamentary by-election polls, see PollBase.

Last week’s edition

The public is often smarter than the headlines suggest.

My privacy policy and related legal information is available here. Links to purchase books online are usually affiliate links which pay a commission for each sale. Please note that if you are subscribed to other email lists of mine, unsubscribing from this list will not automatically remove you from the other lists. If you wish to be removed from all lists, simply hit reply and let me know.

Will the don’t knows rescue the Conservatives?, and other polling news

The following 10 findings from the most recent polls and analysis are for paying subscribers only, but you can sign up for a free trial to read them straight away.
