The myth of George Gallup

Welcome to the 72nd edition of The Week in Polls, which has therefore been going for longer than 14 British Prime Ministers have held office. This time I’m taking a dive back in time, to some of the truth behind the popular hagiography about pioneering pollster George Gallup.

Then it’s a look at the latest voting intention polls followed by, for paid-for subscribers, 10 insights from the last week’s polling and analysis. (If you’re a free subscriber, sign up for a free trial here to see what you’re missing.)

As ever, if you have any feedback or questions prompted by what follows, or spotted some other recent polling you’d like to see covered, just hit reply. I personally read every response.

Been forwarded this email by someone else? Sign up to get your own copy here.

Want to know more about political polling? Get my book here.

Or for more news about the Lib Dems specifically, get the free monthly Liberal Democrat Newswire.

The myth of George Gallup

A popular piece doing the rounds in the last week or two is from Anton Howes about the frailty of historical knowledge, highlighting how rarely sources cited by historians are checked and how often, when they are, problems are found. An historical version of the replication crisis in science, as it were.

My first encounter with this issue came back when I was doing a PhD on 19th century elections. I had come up with a new estimate for the size of the electorate just before the 1832 Great Reform Act. Aside from the quality of my own evidence and argument, I had the idea of going back to the previous best estimate and, rather than simply asserting that mine was better, trying to find a specific flaw with how it had been calculated.

On doing that, and with the knowledge of the data sources I’d acquired over several years of staring at microfiches, I found the flaw.

The flaw was a form of double-counting. It arose because in the early nineteenth century people in Britain did not have a clear vocabulary to distinguish between what we would now call the electorate (i.e. everyone qualified to vote) and voters (i.e. those who actually voted at an election, a subset of the electorate). Instead, ‘voters’ was used interchangeably to mean both. So if you wanted to work out the electorate and had ‘voters’ figures for each constituency, some had to be taken as given and some had to be adjusted upwards to account for turnout.

What I spotted was that the previous authoritative estimate had adjusted all the figures, inflating the estimated size of the electorate (and so making the 1832 Great Reform Act look less great by reducing its apparent impact in increasing the electorate).
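For those who like to see the arithmetic, here is a minimal sketch of how that kind of error inflates a total. Everything in it is invented for illustration: the two constituencies, their figures, the 80% turnout rate and the `is_electorate` flag are all assumptions of mine, not data from my PhD or from the earlier estimate.

```python
from dataclasses import dataclass

# Hypothetical turnout rate, assumed purely for illustration.
ASSUMED_TURNOUT = 0.8


@dataclass
class Constituency:
    name: str
    reported_voters: int
    # True if the source's 'voters' figure already means everyone
    # qualified to vote; False if it means those who actually voted.
    is_electorate: bool


def estimate_electorate(rows, adjust_all=False):
    """Sum an electorate estimate across constituencies.

    With adjust_all=True every figure is scaled up for turnout,
    which reproduces the double-counting style of error.
    """
    total = 0.0
    for row in rows:
        if row.is_electorate and not adjust_all:
            # Figure is already the electorate: take it as given.
            total += row.reported_voters
        else:
            # Figure counts only those who voted: scale up for turnout.
            total += row.reported_voters / ASSUMED_TURNOUT
    return round(total)


# Invented example figures.
rows = [
    Constituency("A", 1000, True),   # already an electorate figure
    Constituency("B", 800, False),   # a count of those who voted
]

careful = estimate_electorate(rows)                    # 1000 + 1000
inflated = estimate_electorate(rows, adjust_all=True)  # 1250 + 1000
print(careful, inflated)
```

In this made-up case, adjusting every figure pushes the estimate from 2,000 to 2,250, simply because constituency A gets scaled up when it should have been left alone.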

In fairness to whoever made the mistake, and to everyone who had read it since without spotting it, I hadn’t spotted it the first time around either. It was only when I went back determined to find a problem - and armed with relevant specialist knowledge - that it leapt out at me.

I was unfashionably early to the replication crisis in history. More recently, I had a similar experience when writing my book about political polling and looking at the record of George Gallup.

If you know a bit about the history of polling you probably know something like the following. In 1936, a magazine - the Literary Digest - asked its readers to write in to say who they were going to back for US President. The result was a predicted Republican landslide. But George Gallup also ran the first modern, scientific poll at an election. He said the Democrats would win in a landslide. Not only that, he predicted how wrong the magazine’s figures would be before they came out. The result? Gallup was right about the election and right about the magazine’s error. Modern polling had shown its superiority and reader self-surveys died off as a way of calling elections.

In this story, Gallup is the scientific pioneer who got it right. Yet it’s also a story that is as much, if not more, Gallup public relations puffery as it is truth. Even sources that are as sceptical of PR and spin doctors as the Wikipedia editing community give you an uncritical version of Gallup’s self-aggrandising.

It’s the story I used to believe too, until I went checking some sources and asking some simple questions, such as ‘what vote shares did Gallup’s polls show and how did they compare to the result?’.

As I wrote in that book, Polling UnPacked, Gallup was lucky in getting his prediction of the magazine’s figures so close:

Gallup produced his figures six weeks before the Literary Digest survey had yet started, and the magazine’s gathering of responses for its poll lasted several weeks. Therefore, for Gallup to be so close required a large degree of luck, as the support for candidates can change over time.

Even had Gallup’s polling been perfect and his understanding of the Literary Digest’s flaws been spot on, there was no way he could be sure that reality would not change between his poll and the conclusion of that of the magazine. It was not so much a brilliant prediction as a lucky guess. Data made it an informed guess. But a lucky guess it still was, turned by luck and a flair for publicity into apparent brilliance.

Nor was Gallup the only pollster with modern methods to be polling the election. In fact, he wasn’t even the one with the best results:

He had two rivals who, like him, had developed polling expertise for business research and also applied it to politics: the Fortune survey, masterminded by Elmo Roper (whose commencement of polling for the magazine pre-dated Gallup by a few months), and Archibald Crossley, who masterminded surveys for Hearst Publications. Ironically, Crossley was formerly of the Literary Digest. He had then gone on to pioneer random sampling by telephone to generate radio audience data.

These two rivals of Gallup both also got the 1936 election result right. But Gallup, with his flair for publicity, scooped the attention despite Fortune being closer on the vote shares.

Roper was ahead of Gallup in adopting modern polling for public consumption, and his 1936 election prediction was better too. But Gallup was the better self-publicist and so the myth was born.

There’s a darker side to the Gallup myth too, which I dig into in more detail in the book. As academic research has shown, he presented his polls as giving the voice of America, but his methods deliberately downplayed the views of women and of Black Americans. The roots of that may have been in relative turnout at elections (with physical intimidation and legal obstacles reducing turnout among Black Americans, for example). But the down-weighting of their views went wider than that and featured in polls that were presented, without caveats, as giving the views of all Americans.

Gallup was, of course, helped by being ‘right’. His methodology was much better than the Literary Digest’s, and the 1936 US election did mark a turning point in how we try to measure public opinion ahead of an election. He provides a human interest encapsulation of a genuine change for the better. But in doing so, he’s also become a figure of myth, one that doesn’t survive checking out what the original sources really said.

Would you like to hear more about the history of political polling? Or more about the 1936 election specifically? Or would you rather I stuck to the 21st century? Do hit reply and let me know.

Know other people interested in political polling?

Refer friends to sign up to The Week in Polls too and you can get up to 6 months of free subscription to the paid-for version of this newsletter.

National voting intention polls

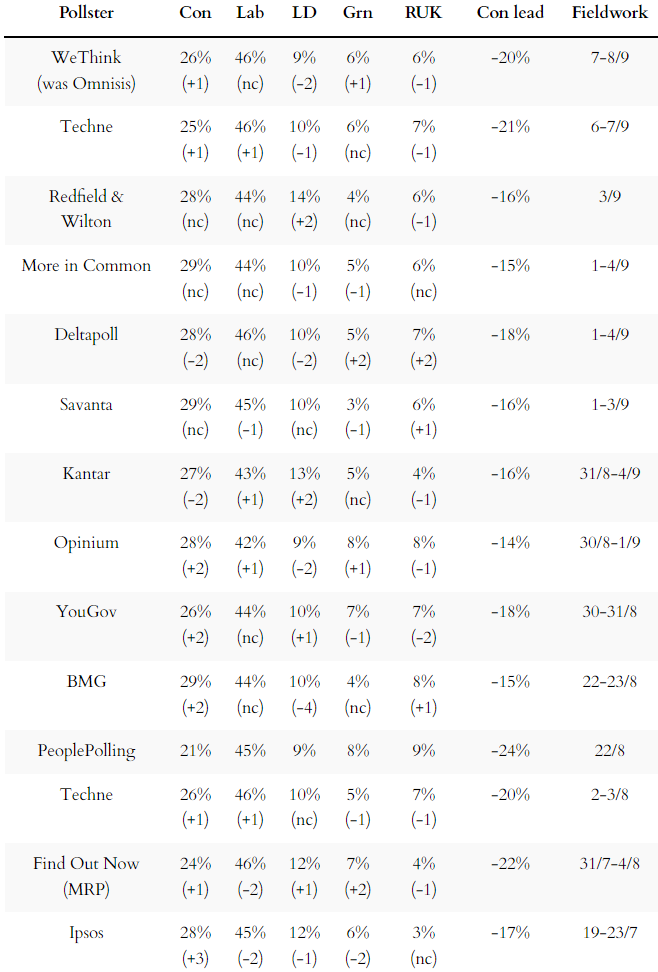

Last week saw a welcome return to voting intention polling by Kantar, one of the lower-profile pollsters but also (under various names) one of the pollsters with the best records in recent elections.

It was another week without a poll putting the Conservatives on more than 30%, extending the run stretching back to late June (when a Savanta poll gave them 31%).

Here are the latest figures from each currently active pollster:

For more details and updates through the week, see my daily updated table here and for all the historic figures, including Parliamentary by-election polls, see PollBase.

Last week’s edition

What the polls (currently) say about ULEZ.

My privacy policy and related legal information is available here. Links to purchase books online are usually affiliate links which pay a commission for each sale. Please note that if you are subscribed to other email lists of mine, unsubscribing from this list will not automatically remove you from the other lists. If you wish to be removed from all lists, simply hit reply and let me know.

Starmer outpolls Corbyn in London, and other polling news

The following 10 findings from the most recent polls and analysis are for paying subscribers only, but you can sign up for a free trial to read them straight away.
