Welcome to the 103rd edition of The Week in Polls, which dives deep into the world of MRPs, comparing the latest results from two of the most high profile models. It’s also time for another quarterly round-up of what the voters have been writing on their ballot papers rather than saying to pollsters.

Then it’s a look at the latest voting intention polls followed by, for paid-for subscribers, 10 insights from the last week’s polling and analysis. (If you’re a free subscriber, sign up for a free trial here to see what you’re missing.)

But first, this week’s gentle sigh of disappointment is directed at The Guardian for sending a journalist out vox popping, finding that every single person they spoke to was voting for the same party… and then writing it all up as if that obviously atypical group of people somehow did give insight into what the public is thinking.

As ever, if you have any feedback or questions prompted by what follows, or spotted other recent polling you’d like to see covered, just hit reply. I personally read every response.

Been forwarded this email by someone else? Sign up to get your own copy here.

Want to know more about political polling? Get my book Polling UnPacked: the history, uses and abuses of political opinion polling.

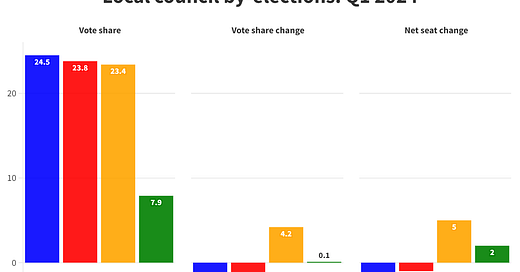

How voters have been voting

When two MRPs face-off...

MRPs continue to be the golden child of political polling. If you publish an MRP, its headlines will grab media attention and its details will be pored over with a degree of reverence usually reserved for the IFS’s number crunching on Budgets.

Unless you are a regular reader of this newsletter, of course, in which case you’ll know that MRPs are harder to get right than traditional polls, and that the results often tell us as much, or more, about the assumptions of the modellers as they do about what people have said in answer to a polling survey.

If you’re a newer reader - and especially if you’re wondering when the heck I’m going to explain what an MRP is - then my previous guide to MRPs is best read first before carrying on with this piece.

We’ve recently had new results out from probably the two most prominent MRP models around: YouGov, famous for their 2017 MRP debut,1 and Survation, prominent for now being the MRP model of choice for the high profile campaign group and tactical voting supporters, Best for Britain.2

With nearly identical fieldwork dates, this makes for a great opportunity to compare the models.

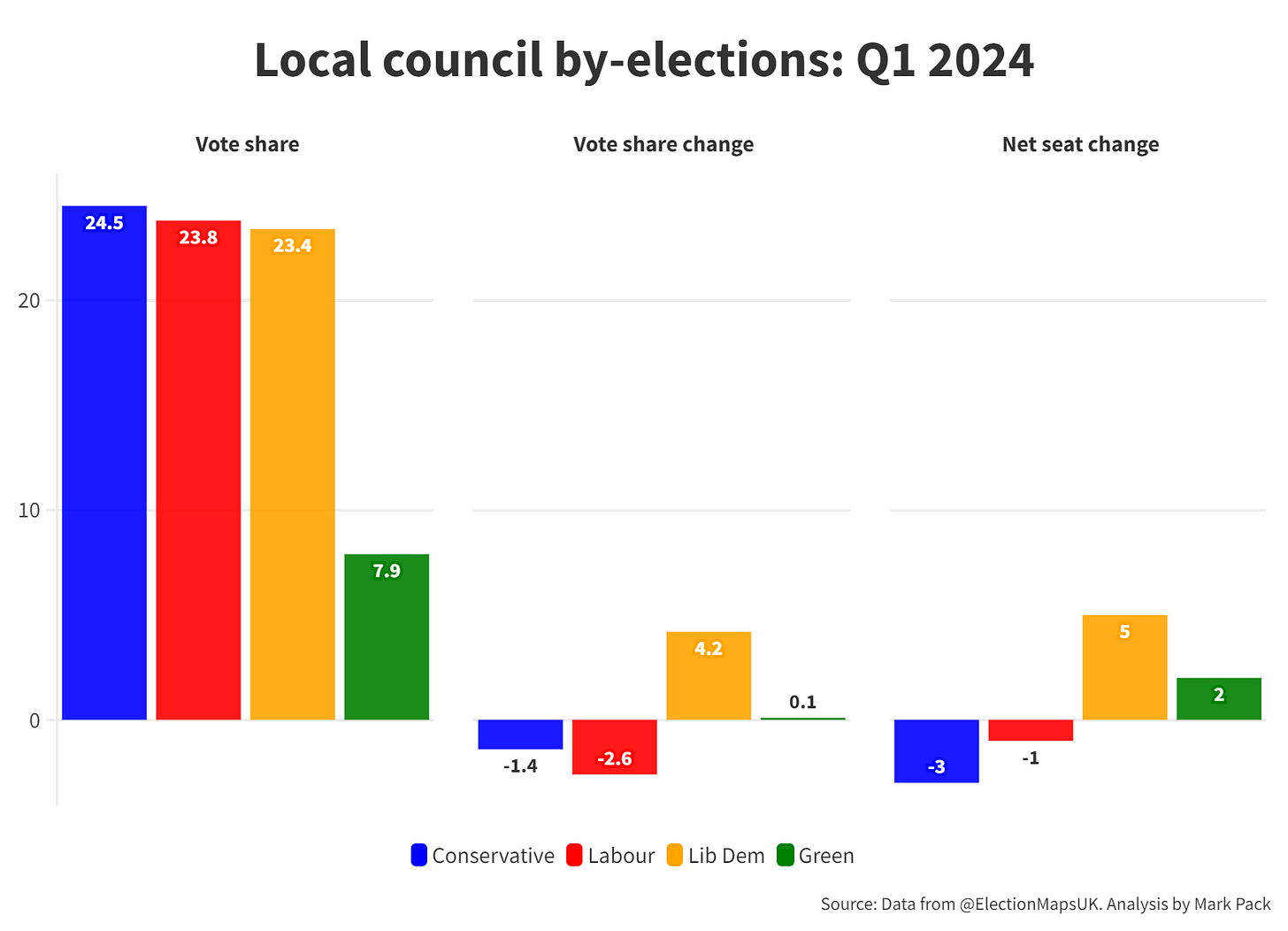

The headline vote shares

Handily, not only do the two MRPs have very similar fieldwork dates, but in both cases the same polling company has a traditional poll (Survation) or multiple polls (YouGov) from nearly the same period too.

Here is how they all compare:

It’s all pretty similar as far as the big headlines go. The Conservatives well down in the twenties, Labour in the forties and Labour’s leads not only large but clearly larger than their 1997 general election lead of 13%.

Survation’s MRP and non-MRP are nearly identical, while YouGov’s has greater variation, primarily it appears due to the different way it treats don’t knows between its normal polls and its MRPs.3 But even the biggest difference is only three points (21% vs 24% for the Conservatives). That isn’t a difference large enough to get wisely excited about.

Comparing the MRP and non-MRP voting figures and getting pretty much the same answer is reassuring - two different methods both finding the same results.

It is also boring. Who needs fancy MRP if old fashioned polling gives you the same answer?

The seat numbers

The answer to that should be that MRP gives lots of interesting constituency figures. So let’s see how those compare:

Here there is more variation. The big picture is still the same: huge Labour win. But the seat numbers are very different, with YouGov giving the Conservatives half as many MPs again as Survation.4

Who is third in the House of Commons also varies between the two, with the SNP double the Lib Dems in one and the Lib Dems more than double the SNP in the other. Plaid and Greens also get rather different fates. For smaller parties - and this you might think is where the benefits of MRP should come in, being able to give us a much more nuanced figure than national vote share polls - the two MRPs tell us very different stories.

Survation has Labour doing much better than even its 418 seats in 1997.5 YouGov ‘merely’ has Labour with a majority of over 150.

Either way, that may seem such a massive majority as to be improbable… but it is also what those traditional voting intention polls are telling us at the moment too. Indeed, Survation’s founder has defended his firm’s model, pointing out how you can find even larger majorities with some of the current polls and standard votes to seats calculations.

The individual seats

What then about the individual seats? Do the seat by seat results give us lots of fun/meaningful insight, or are there patterns that should cause us to doubt one or both MRP models - or indeed MRP more generally?

For this, I have compared the MRP projections for vote share by party in each seat6 with the Thrasher and Rallings calculations of what the vote share would have been in each seat under the new Parliamentary boundaries at the 2019 general election.

Let’s start with the tails. As I’ve pointed out, and as Peter Kellner has written about, one feature of the MRP methodology is that it naturally comes out with some strange results at the tails, such as showing a party doing better in its very weakest seats. There’s a bit of regression to the mean that comes out from an MRP, and the question for the modeller is whether to believe that and embrace it or to disbelieve it and tweak the model to downplay it.

Survation are more in the former camp and YouGov in the latter, as the figures from their models clearly show.

Taking the 37 seats in which the Conservatives polled under 15% in 2019, Survation finds that on average, the Conservative vote will rise by 0.1% in them, while YouGov finds that it will fall by 1.8%. Both pollsters find the average fall across all seats for the Conservatives to be 18%.

Now clearly if you are starting on under 15%, your vote share cannot then fall by 18%. We should then expect to find that the vote share changes in the weakest seats for the Conservatives are different. But is it really plausible that in their 37 worst seats, when their national vote share is plunging, the Conservative vote will (just) go up, as Survation finds?

It’s only up by 0.1%, and so a slightly different set of figures with it down by 0.1% would have looked less surprising. So we shouldn’t read too much into it being up rather than down. Even so the difference from YouGov shows the impact of what YouGov has explained it is now doing about the weird results generated in the tails:

We have developed a new technique called ‘unwinding’. The unwinding algorithm looks at historical results and learns from them what the typical distribution of party vote shares tends to look like (for each party), and re-fits constituency-level shares in the posterior distribution to better reflect this variance. This has the effect of ‘unwinding’ the posterior distributions to better reflect the spread of constituency-level results at British general elections, and in turn reduces the proportionality of the swing.

The impact of this comes out again if you look at the seats in which Survation finds the Conservative vote will rise. There are 23 of them, all in weaker territory for the party, with the party not having got over 20% in any of them in 2019. They are generally urban and less prosperous. A few are in London, which might tempt you to look for a ULEZ-related explanation, but as most aren’t, that doesn’t get us very far into explaining why it would be these Conservative local parties that would be crowing about being the top performers:

Bethnal Green and Stepney

Birmingham Hall Green and Moseley

Birmingham Ladywood

Brighton Pavilion

Cambridge

Glasgow North

Hackney North and Stoke Newington

Hackney South and Shoreditch

Holborn and St Pancras

Hornsey and Friern Barnet

Islington North

Lewisham North

Lewisham West and East Dulwich

Liverpool Riverside

Liverpool Wavertree

Liverpool West Derby

Manchester Rusholme

Manchester Withington

Peckham

Queen's Park and Maida Vale

Sheffield Central

Tottenham

Vauxhall and Camberwell Green

While Survation finds the Conservative vote up in all of these, by 1.7% on average, YouGov with its unwinding adjustment finds the Conservative vote to be falling in these seats, by 1.8% on average. (YouGov only finds two seats in which the Conservative vote will rise, Glasgow North and Hornsey and Friern Barnet, both in the list above).

I find it instinctively pretty unlikely that Survation is right on this and YouGov wrong. With the Conservative vote share overall down by 19%, is it really going to be rising in chunks of Manchester, Liverpool and Birmingham?

A slight check on my instincts, to be fair, comes from the 1997 general election results. That’s a handy yardstick for what is plausible due to being (it looks likely) a similar general election to the next one, i.e. one in which the Conservative vote share is down massively from a win the previous time and the Labour vote share up sharply too.

Looking at the 10 best vote share changes for the Conservatives in 1997, Liverpool and Glasgow do actually feature in that list too. But the Conservative vote share rose in only two seats in 1997, when their national vote share fell by ‘only’ 12 points. So Survation is telling us that with a much larger fall in the Conservative vote share this time (19%), their vote will be up in 23 seats rather than 2.

That does seem to be a piece of brave modelling.

But does it matter?

You could fairly say that it isn’t of much import whether Survation or YouGov is right about the Conservative vote share being up or down in a seat where it ends up being so very low anyway.

The Liberal Democrats

Let’s then turn to some figures of more interest, related to one of the biggest differences between YouGov and Survation: the Lib Dem performance. The two models produce similar national vote shares - 12% versus 10% - but very different seat numbers - 49 versus 22.

Even the 22, however, would be a doubling of the party’s 2019 result, and both vote share figures are essentially unchanged on last time. So both MRPs are suggesting a very good election result for the Lib Dems, with little net change in votes and a big increase in seats.

Against that background how plausible is it that the three worst Lib Dem results in the whole country, with massive vote share falls according to Survation, will be Bath (-17%), Twickenham (-17%) and St Albans (-18%)?

If Survation was predicting a 2015-style disaster for the Lib Dems, then clearly yes, it would be plausible. But remember their MRP is one in which the number of Lib Dem MPs doubles though vote share is static.

You might expect therefore the story to be that the Lib Dems are doing well in their strongest areas but falling back in weaker areas. But that isn’t what the Survation MRP says. Rather, it tells us that, although the Lib Dems hang on in each of these three seats, the party will be greatly losing support in its strongest areas even as it’s making dramatic moves forward in other places to gain seats.

That seems a tricky mix to explain, and one that is at odds with evidence from local elections during this Parliament where there isn’t a sign of the party’s strongest areas being where it is doing badly even while advancing overall. A tricky mix to explain, moreover, when we add two further pieces of evidence.

First, let’s dig into the St Albans example. Here is a seat which the Lib Dems gained for the first time in 2019, and so there’s the usual double-incumbency effect - the defeated party loses its incumbency bonus and the new winning party gains it. Moreover, the local election results since 2019 in St Albans (as in the other two) have been extremely good. Plus the MP there, Daisy Cooper, is one of the relatively few Liberal Democrats who appears regularly in the national media, and she’s not had any big scandal or controversy as MP.

So against all of that - national vote share flat, MPs doubling, double-incumbency boost at play and very strong local election results - St Albans is meant to be a seat where the Lib Dems go massively backward? That is, as I said, a tricky one to explain.

All the more so if we return to the 1997 yardstick, where the Lib Dem vote share went down by 1% and the seat tally went up sharply, from 18 to 46. None of the 10 worst Lib Dem results in 1997 were in seats like the three worst performers in Survation’s poll.

None of the ten were in held seats, nor indeed were any in seats the party was targeting. In nearly all cases the party was running a very limited, at most, campaign and the list is full of places where the party had been in contention years earlier, but where the party was no longer, due to factors such as local government collapse or previous Liberal or SDP MPs having lost their seats. It’s a very different list from the Survation list of the top 10 Lib Dem declines despite the overall landscape of the two elections being so similar.7

The YouGov pattern for biggest Lib Dem vote share falls looks, overall, much more plausible to me. There are a couple of seats in there where I think there are very good chances of the party doing significantly better, but overall both in terms of the seats and the level of vote share change predicted (an average 8% fall in the 10 worst seats, compared with 15% in the 10 worst Survation ones), it’s more plausible.

Again, it looks like the Survation model’s preference for regression to the mean at the tails and for a more proportional swing than YouGov’s produces less plausible patterns when you get into the details.

Two final examples of this. One I was tempted to exclude because I am afraid I won’t give you the details, for obvious reasons. I’ve taken a look in both Survation and YouGov at the group of seats which the Lib Dems targeted hard in 2019 but are not seriously campaigning to win next time around. If the national vote share picture is flat and given that context, it’s reasonable to expect the party’s vote share to go noticeably backwards in those. In the vast majority of these, YouGov is predicting a bigger vote share decline for the Lib Dems than Survation. Survation is (wrongly, I suspect, in this case) being kinder to the party.

So the question is not about whether Survation is being ‘too mean’ to the Lib Dems overall, but rather whether it is getting the vote distribution between different seats wrong and so under-predicting the Lib Dem seat total.

Which brings us to some final examples. Seats where Labour was third in 2019 behind the Lib Dems, which Labour has not given any outward signs of targeting seriously this time around (judged by measures such as Keir Starmer visits), where the Liberal Democrats have had very good local election results since then and Labour not, and where the outward signs from the Lib Dems are of them getting serious attention from the party (judged by measures such as Ed Davey turning up with one of his props).

I wrote about one of these in the paid-for section last time:

Take Chichester, where in 2019 the Lib Dems finished second, ahead of Labour, and in the 2023 local elections (on slightly different boundaries) the Lib Dems took control of the council with 14 net gains to go up to 25 seats while Labour was wiped out, winning no seats, a loss of 2. The Lib Dem PPC, Jess Brown-Fuller, was one of the (very few) speakers at the Lib Dem spring conference rally and Ed Davey, complete with his blue door prop, has visited Chichester recently, again one of only a handful of places to get the leader and the door.2 Labour and Keir Starmer have been doing nothing similar. Of course, parties do get where they choose to campaign and where they think they can or can’t win wrong at times. But it is still a pretty brave call to find a Labour win here.

Survation has the Conservatives holding on in Chichester, with Labour moving well ahead of the Lib Dems. YouGov has the Lib Dems winning it.

Or take the similarly named pair of Woking and Wokingham. Survation has Labour winning both, YouGov has the Lib Dems winning both with Labour third. As with Chichester, the local government election results since 2019, the travels of Ed Davey’s props department, the strategic choices of campaign visits by Keir Starmer and so on: all the public signs are that not only the Lib Dems but Labour too reckon that YouGov has it right.8

In conclusion…?

Sticking with the Liberal Democrats, here’s one chart that pulls many of these points together. It shows the distribution of constituency vote shares for the party found by each of the YouGov and Survation MRPs. As YouGov overall finds a 2 point higher vote share for the Lib Dems, in order to make the comparison clearer, 2 points have been taken off each YouGov Lib Dem figure. That moves the line up the graph but doesn’t change its shape:

What this shows us is that YouGov concentrates Lib Dem support in a smallish number of stronger seats. Hence the YouGov line is generally slightly below the Survation line, but for the strongest areas over to the right, it’s very significantly above it. Hence the much larger seat total found by YouGov.
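To make the arithmetic behind that point concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the ten seat shares in each list are invented for illustration (they are not taken from either MRP), and the 35% winning threshold is a crude stand-in for what in reality depends on how the other parties do in each seat. It shows only that two distributions with the same average vote share can produce different seat totals.

```python
def mean(values):
    """Average of a list of vote shares."""
    return sum(values) / len(values)


def seats_won(shares, winning_threshold):
    """Count seats where the share reaches a (hypothetical) winning threshold."""
    return sum(1 for share in shares if share >= winning_threshold)


# Invented figures: both lists average 12%, but one is 'lumpier' than the other.
lumpy_shares = [40.0, 38.0, 6.0, 6.0, 6.0, 6.0, 6.0, 4.0, 4.0, 4.0]
smooth_shares = [20.0, 18.0, 16.0, 14.0, 12.0, 10.0, 10.0, 8.0, 6.0, 6.0]

# Assumed threshold; real contests are decided by the gap to the other parties.
WINNING_THRESHOLD = 35.0

for label, shares in (("lumpy", lumpy_shares), ("smooth", smooth_shares)):
    print(label, "average:", mean(shares), "seats:", seats_won(shares, WINNING_THRESHOLD))
```

With these made-up numbers the lumpier distribution clears the threshold in two seats and the smoother one in none, despite identical averages.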

If I had started with this graph, showing how Lib Dem support is lumpier with YouGov and smoother with Survation, you might reasonably have wondered why one should be particularly considered more likely to be right than the other. But hopefully the run-up to this, with the other examples, shows why I think - despite my own self-interest in this subject - it’s reasonable to think that the YouGov model is producing more plausible results.

Whatever your view on that, what should also be clear, I hope, is how MRPs can’t all be treated alike. Different models produce different results, and good coverage of MRPs doesn’t treat their seat numbers with reverence but rather gets into explaining to people how the key assumptions and design decisions vary between the models, unpacking what that means for what may or may not be true about what’s happening with voters.

Some of what an MRP tells us is what the voters are saying. Some too, though, is what the modellers think is going to happen.

A breaking news epilogue: after I had written this piece, Chris Hanretty - an expert MRP modeller himself - published a piece defending the tendency of MRPs to produce proportional rather than uniform swings. (See my earlier MRP guide for what this issue is about.) His argument, with evidence from previous Parliamentary cycles, is that voters behave in a way that is more proportional when answering polling questions ahead of polling day and their answers change to produce more uniform swing results as polling day nears. For example, more people focus in on whether or not they will vote tactically, and the increasing prevalence of tactical voting in their answers makes the swings in the polling results more uniform and less proportional. So even if nothing changes in the model other than the polling data from voters, the Survation MRP model will produce results more in line with uniform swing as polling day nears. His piece is an important contribution to the debates over MRPs more generally. In the context of what I’ve written here, I draw the implication that Survation’s MRP results will become more like YouGov’s as polling day nears, because YouGov’s model is designed to be less proportional, and so that seems to reinforce my suggestion that in the cases I’ve looked at we’re more likely to see seat results closer to the current YouGov picture than the current Survation one.
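For readers who want the uniform versus proportional swing distinction spelled out, here is a rough sketch in Python. It is not either pollster’s model. The national figures (a fall from an assumed 44% to 26%, i.e. 18 points) and the three seat shares are illustrative assumptions, and the two functions are simply the textbook definitions of each kind of swing.

```python
def uniform_swing(seat_share, national_before, national_after):
    """Apply the same percentage-point change to every seat, floored at zero."""
    change = national_after - national_before
    return max(0.0, seat_share + change)


def proportional_swing(seat_share, national_before, national_after):
    """Scale every seat's share by the same ratio as the national change."""
    return seat_share * national_after / national_before


# Assumed national figures for illustration: an 18 point fall from 44% to 26%.
NATIONAL_BEFORE = 44.0
NATIONAL_AFTER = 26.0

# Hypothetical weak, middling and strong seats for the party.
for share in (12.0, 30.0, 60.0):
    u = uniform_swing(share, NATIONAL_BEFORE, NATIONAL_AFTER)
    p = proportional_swing(share, NATIONAL_BEFORE, NATIONAL_AFTER)
    print(f"2019 share {share:4.1f}% -> uniform {u:4.1f}%, proportional {p:4.1f}%")
```

Under these assumptions a seat starting on 12% cannot lose 18 points, so uniform swing hits the floor, while proportional swing gives a smaller fall there and a larger one in strong seats. Neither rule on its own produces a rise in a weak seat.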

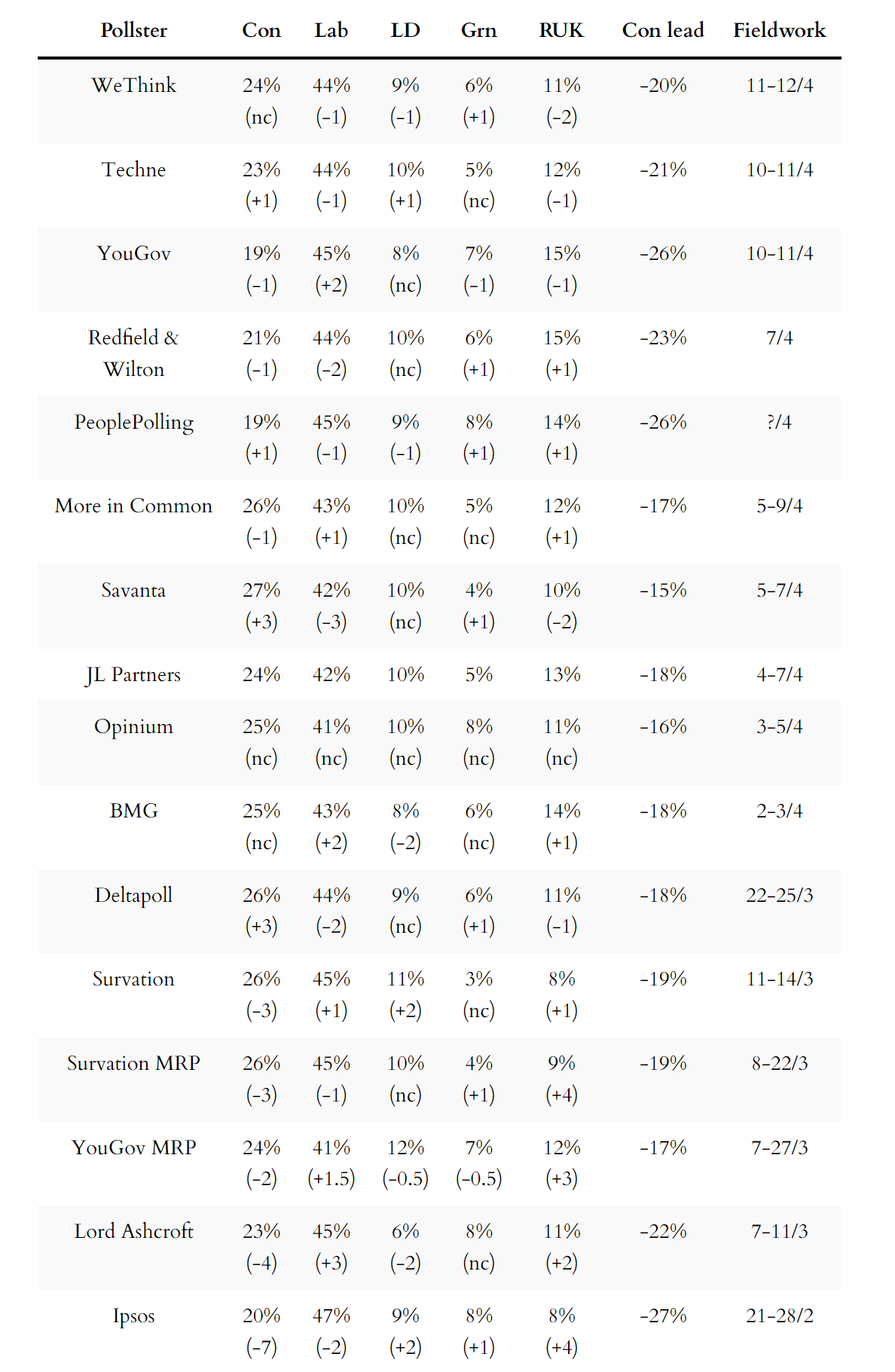

National voting intention polls

Comments about the Duke of Wellington and Michael Foot all still apply as being 15 points behind now counts as a relatively good polling result for the Conservatives:

For more details and updates through the week, see my daily updated table here and for all the historic figures, including Parliamentary by-election polls, see PollBase.

Last week’s edition

A new polling technique and new data: the latest on immigration views.

My privacy policy and related legal information is available here. Links to purchase books online are usually affiliate links which pay a commission for each sale. Please note that if you are subscribed to other email lists of mine, unsubscribing from this list will not automatically remove you from the other lists. If you wish to be removed from all lists, simply hit reply and let me know.

Where young people get political news from, and other polling news

The following 10 findings from the most recent polls and analysis are for paying subscribers only, but you can sign up for a free trial to read them straight away.
