How did the pollsters do in the local elections?

Welcome to this week’s edition which is an initial look at how the pollsters and polling-based models fared in the May 2023 local elections.

Thank you for all the lovely feedback for last week’s edition, a detailed look at Matt Goodwin’s new book, Values, Voice and Virtue. I should warn you though that this has tempted me to think of other longer reads for later this year… and I’ve also repeated the format introduced last time of adding footnotes to the main story for paid-for subscribers.

As ever, if you have any feedback or questions prompted by what follows, or spotted some other recent polling you’d like to see covered, just hit reply. I personally read every response.

If you’d like more news about the Lib Dems specifically, sign up for my monthly Liberal Democrat Newswire.

My privacy policy and related legal information is available here. Links to purchase books online are usually affiliate links which pay a commission for each sale. Please note that if you are subscribed to other email lists of mine, unsubscribing from this list will not automatically remove you from the other lists. If you wish to be removed from all lists, simply hit reply and let me know.

How did the pollsters and modellers do in the May elections?

There are three ways of judging the polls, or models based on the polls: by votes, by seats and by winners.

Purist pollsters will often say that they should only be judged by votes as that’s what voting intention polls are designed to do (individual seat polls aside). If a pollster puts Party A on 41%, Party B on 39% and the result is Party B 40% and Party A 39%, they’d count that as a triumph - only 2 points out on Party A and also only 1 point out on Party B. Pretty close to being spot on, well within margins of error, good by historical standards and much better than an army of pundits. And yet… Party B got the most votes, not Party A.
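To make the two yardsticks concrete, here is a minimal Python sketch using the hypothetical Party A and Party B figures above. The function name `judge_poll` and the dictionary layout are my own illustrative choices, not anything a pollster actually uses.

```python
def judge_poll(poll, result):
    """Compare a poll with a result by vote share error and by winner."""
    # Absolute error, in percentage points, for each party
    errors = {party: abs(poll[party] - result[party]) for party in poll}
    # The party with the highest share in the poll and in the result
    poll_leader = max(poll, key=poll.get)
    actual_winner = max(result, key=result.get)
    return {
        "errors": errors,
        "max_error": max(errors.values()),
        "winner_called": poll_leader == actual_winner,
    }


# Hypothetical figures from the example above
poll = {"Party A": 41, "Party B": 39}
result = {"Party A": 39, "Party B": 40}

verdict = judge_poll(poll, result)
print(verdict)
```

On the votes yardstick the worst miss is 2 points, yet `winner_called` comes out as `False`: the same poll passes one test and fails the other.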

Which is why the more pragmatic approach to judging the polls is that we’re only interested in their figures because we think they may tell us who is going to win.1 Seats, and above all who is in power after the election, is what we’re really interested in.

From this perspective, the purist pollster approach is like saying Arsenal are going to beat Liverpool 1-0 in the FA Cup Final, seeing Liverpool win 1-0 and then saying, ‘yeah, but my prediction was really good - I was within 1 goal for both sides’. Useful, perhaps, for spread betting, but otherwise rather missing the point of why we make and argue over such predictions.

Getting seats and winners right matters, even for pollsters.

That said, even in elections with dramatic landslides, it’s the norm for most seats, or councils, not to change hands. This year, for example, the Conservatives - outside of Greg Hands’s Twitter feed - got a massive drubbing. Yet control of 65% of councils did not change. So when judging whether a poll or a model beats simply predicting the status quo, don’t be impressed by claims like ‘we got 75% of council control outcomes right’.
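One rough way to put a number on that is to ask how many of the status quo’s misses a forecast actually fixed. The sketch below is a simple skill score of my own choosing, not a measure any of the pollsters published. It assumes that a ‘no change everywhere’ forecast would have been right in exactly the 65% of councils where control stayed put, and it uses the illustrative 75% claim from above.

```python
def skill_over_baseline(accuracy, baseline):
    """Share of the baseline's misses that the forecast fixed.

    1.0 means a perfect forecast, 0.0 means no better than the baseline,
    and a negative value means worse than the baseline.
    """
    if not 0 <= baseline < 1:
        raise ValueError("baseline must be at least 0 and below 1")
    return (accuracy - baseline) / (1 - baseline)


status_quo = 0.65  # from the text: share of councils where control did not change
claimed = 0.75     # the illustrative claim quoted above

skill = skill_over_baseline(claimed, status_quo)
print(f"Improvement over the status quo: {skill:.0%}")
```

On those assumptions, a 75% hit rate fixes only about 29% of what the status quo forecast got wrong, which is a much less flattering figure than the headline 75%.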

For these local elections, there’s an added complication of what we mean by “votes”. Only part of the country voted, and so there are two versions of “votes” we can think about. One is simply the aggregate total of votes cast.2 However, we're also often interested in what the local elections mean for the country as a whole or a future general election. So both John Curtice and the BBC team, and also Michael Thrasher and Colin Rallings, produce extrapolations from the areas that did vote to what that would have meant if the whole country had voted. They use different methodologies, and so come up with slightly different figures, but the patterns of both are the same.

Those national projected vote shares are therefore the best to use when talking about the state of politics and parties. However… this time around, two pollsters did local election voting intention polls just before polling day and only asked that question of people in places with local elections. Therefore, their ‘national’ vote share figures need comparing with the raw totals rather than with the Curtice/BBC or Thrasher & Rallings figures.

One other preliminary to note: at time of writing we’re still waiting for the results from one council (Redcar and Cleveland), and also there looks to have been widespread misreporting of the Wychavon result (Lib Dems won two more seats and Conservatives two fewer than listed by the BBC or The Guardian, for example). None of that though will change the overall picture much.

Let’s then look at how people did…

YouGov

An MRP poll covering 18 councils was used to both predict seat numbers and likely council control outcomes in those 18. Overall, YouGov got the picture right:

Both Labour and the Liberal Democrats look set to take a host of council seats, and control of a number of councils, from Tory hands.

MRP is used however to promise much more than just the broad picture, so let’s take a look at the details.

For each council, YouGov gave both a ‘note’ (e.g. ‘Modest Conservative gains’) and a ‘call’ (e.g. ‘Leaning Labour’).

Of those notes, nine were right,3 five partly right (e.g. predicting significant gains for a party that actually got modest gains)4 and four wrong (e.g. predicting modest Labour and Lib Dem gains in East Cambridgeshire though the results brought no gains for either).

That’s a pretty good score - and one that shows how the overall picture was painted correctly by YouGov.

Of the calls, it’s a more complicated picture:

2 ‘solid’ calls, one solid Conservative and one solid Labour - both correct.

11 ‘leaning’ calls, such as ‘leaning no overall control’ - of these, the lean matched the outcome in six cases and missed in five. If you’re splitting things into ‘solid’ and ‘lean’ then you shouldn’t expect every ‘lean’ to go that way, so having some misses in this category is to be expected.

5 ‘too close’ calls - though three of those saw results where the seat numbers came out not particularly close.
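As a quick check on that tally, here is a short Python sketch that adds up the calls and works out the hit rate for each confidence level. The counts are the ones listed above; the variable names and layout are just mine for illustration.

```python
# Counts taken from the tally above; 'too close' calls have no direction to score
calls = {
    "solid": {"made": 2, "correct": 2},
    "leaning": {"made": 11, "correct": 6},
}
too_close = 5

total_calls = sum(c["made"] for c in calls.values()) + too_close
hit_rates = {level: c["correct"] / c["made"] for level, c in calls.items()}

print(f"Total calls: {total_calls}")
for level, rate in hit_rates.items():
    print(f"{level}: {rate:.0%} correct")
```

The total comes to 18, matching the number of councils polled. A ‘leaning’ hit rate a little over half is the sort of figure you would hope to see sitting between a coin toss and the ‘solid’ calls, though with only 11 cases it would be unwise to read much into it.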

More subjectively, those calls which turned out wrong did nonetheless often get the direction right. For example, Chichester saw a dramatic Lib Dem gain (+14 seats to get majority control with a 25-11 majority). So the ‘too close’ call was in one sense wrong - it was a landslide, not a nailbiter - but in another sense it was right, i.e. that a formerly strong Conservative position was facing a big threat.

Similarly, in many cases the vote share changes were in the direction indicated even if the seat numbers didn’t end up that way. (The way ‘calls’ are usually presented, such as in American elections, and the way YouGov wrote up the polls, I think it’s fair to judge their calls versus seats. However, I’ve since clarified with YouGov that their descriptions were intended to capture vote movements too, so when all the vote totals are crunched their ‘calls’ may come out better.)

Overall, I think it’s fair to say this was an interesting and in some respects promising development in using MRP for British elections. It got the big picture right - and so once again showed the value of polling over poll-free punditry in understanding what’s going on - and it got the direction of political movement right in many places. But it struggled more at picking up how far that movement was going, especially where the movement was coming from a party bucking national trends.

Many thanks to Patrick English from YouGov for answering far too many questions from me, including on a Sunday.

Electoral Calculus

Electoral Calculus also had an MRP model, based on aggregated poll figures rather than dedicated extra polling.

I think it’s fair to say this wasn’t a happy set of predictions, even though the updated version released just before polling day was slightly better.

Even with the updated figures, the basic story was wrong for the Greens, the Lib Dems and the Conservatives. Nor were things much better on council numbers, with Electoral Calculus predicting no net council gains for the Lib Dems or the Greens, compared with +12 and +1 in reality, and also significantly under-estimating Labour, predicting +8 rather than the actual +22.

(One caveat to note - as the table shows, last year’s predictions were also not great. However the underlying vote share figures were pretty good and so the issue was the modelling rather than the polling. We’ll have to see whether that’s the same again this time.)

The professors

As well as polling-based predictions, we also had some sort-of predictions from two professors usually well worth listening to on electoral statistics.

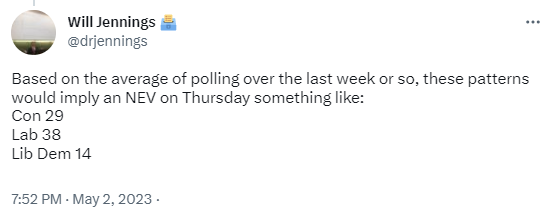

First, Will Jennings, who based his on the historic correlation between poll ratings and projected national vote share at local elections:

NEV is the Thrasher and Rallings national vote share projection, which has come out as Conservative 29%, Labour 36%, Lib Dem 18% - so within a point or two for both Labour and the Conservatives, but more out on the Lib Dems. A good example of how these simple correlations can be used to get a decent sense of what might happen.

The other professor was Stephen Fisher, whose pre-election write-up was Labour to gain 400 seats, Lib Dems to gain 20, others to gain 70 and the Conservatives to lose 490.

However, that was not so much a firm prediction as an intellectual exploration of benchmarks because, as he wrote:

In addition to [several] unusual factors, there are the usual reasons why poll swings do not necessarily translate into local election outcomes, especially local politics and selective candidature. Nonetheless, I have applied my usual local election seats forecasting models regardless. If nothing else it is interesting to consider the extent to which local election results do track opinion polls, and analysis of the discrepancies is instructive.

A wise caveat as it turned out because those figures were less good, missing the stories of both the extent of the Conservative losses (over 1,000) and of the scale of the Lib Dem gains (over 400).

He did, to be fair, add:

The Liberal Democrats have tended to overperform the model since the coalition government ended in 2015. If that tendency continues this year, the Liberal Democrats should be more likely to make net gains of around +240 seats. Even though continued overperformance relative to the basic model seems reasonable this year, it cannot work forever. So I have declined to adjust the model in a way that implies the party will outperform the polls indefinitely.

I think Lib Dems will view that as a challenge, Stephen - and indeed the general pattern across all the polls and models is that, at least for the last few years, they aren’t good at picking up when the Lib Dems are doing well. If the party continues to be on the up, that’s going to continue to be a challenge - especially as the Lib Dems doing well also, almost always, means one of the two big parties doing poorly. So failing to pick out Lib Dem over-performance isn’t only about the Lib Dems. It’s also about the picture for other parties too.

The other pollsters

Both Omnisis and Survation had local election voting intention polls out just before polling day.

In both cases, they only polled people in areas with local elections. So their figures need comparing with the raw vote totals rather than with the Curtice/BBC or Thrasher & Rallings projections.

Those raw vote totals are still being compiled, so I’ll return to how their polls did in a future edition.

However, on first look Survation got the broad picture right. They had Labour up 6 points, Conservatives down 8 points, Lib Dems up 1 point and Greens up 2 points. It may be their Labour increase will turn out to have been over-stated, but the story overall looked right.

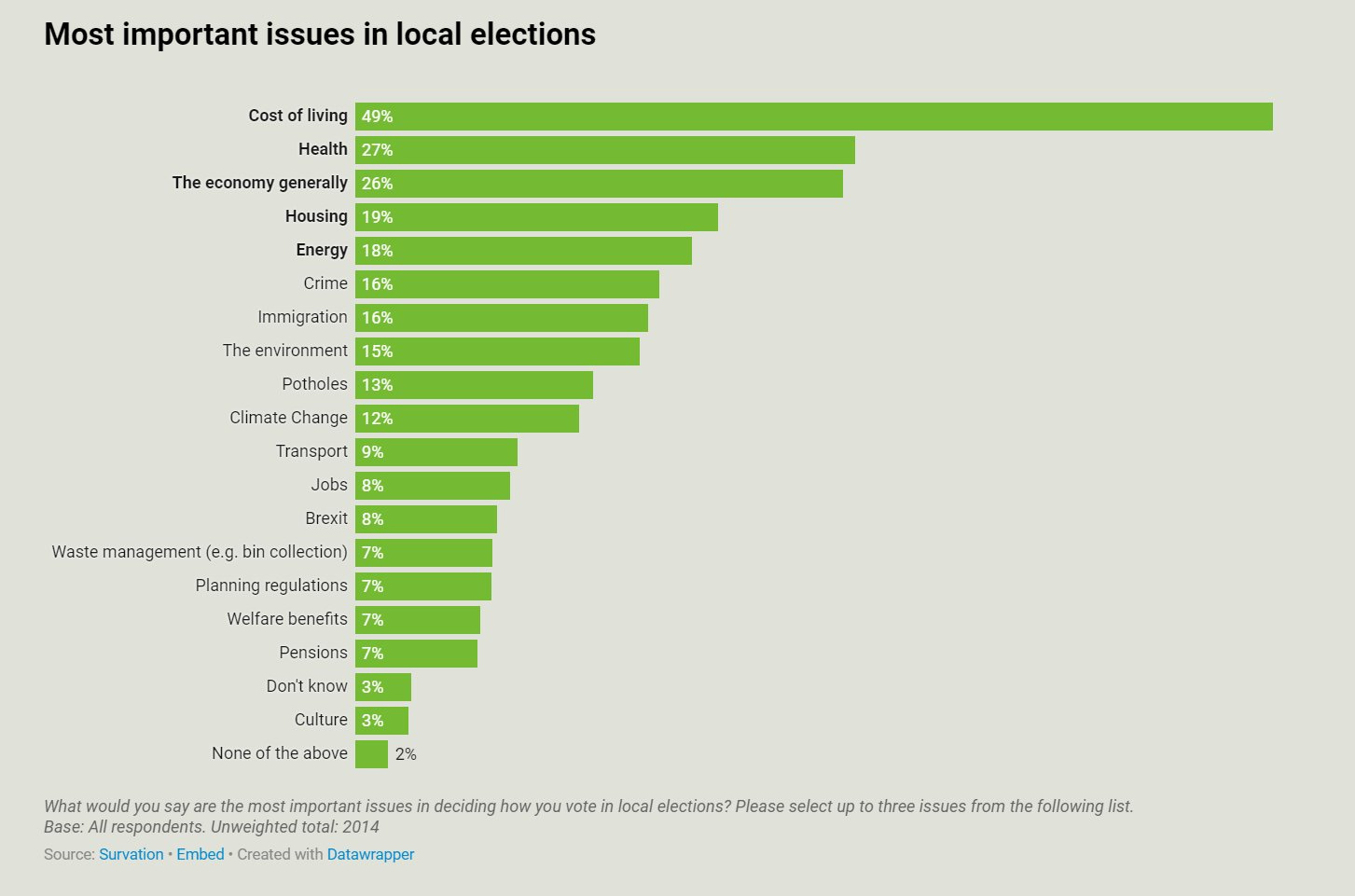

Note also what they found on what issues matter: this is an issues list that you’d expect to lead to a bad Conservative result.

Omnisis also looked to get the broad picture right (and that’s despite the huge over-reporting of likelihood to vote in their sample): Labour well ahead of Conservatives, a Lib Dem showing well above national voting figures, a good Green showing and - as with all the above - no surge for Reform.

More on both when we’ve got the vote totals to compare with properly.

National voting intention polls

Here’s the latest from each currently active pollster:

After the small recovery in Conservative fortunes, things have settled down to a still-large Labour lead. We’ll have to see what impact the local elections make on this but it’s unlikely to revive that Conservative trend. They are, as I’ve written so many times before, still in a big hole.

For more details and updates through the week, see my daily updated table here.

Last week’s edition

What to make of Matt Goodwin's controversial book?

Views on the monarchy, and other insights from this week’s polling…
